Quick Tip: Dumb fix for "ORA-26086: direct path does not support triggers" in Azure Data Factory or Azure Synapse Analytics Pipelines

- General

- May 9, 2023

Table of Contents

You should already know that in my opinion, due to my extended experience, Larry Ellison has an explicit agenda to make your life as miserable as possible and locking you in while you use Oracle products.

The latest of the issues I had while moving data around to Oracle using ADF (or really, I’m using Azure Synapse Pipelines, but it’s literally the same thing) is the following error while I was trying to use a copy data activity to move data from a table into another:

Operation on target Copy Staging to XXX failed: Failure happened on 'Sink' side. ErrorCode=UserErrorOdbcOperationFailed,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=ERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver][Oracle]ORA-26086: direct path does not support triggers

Error in parameter 1.,Source=Microsoft.DataTransfer.ClientLibrary.Odbc.OdbcConnector,''Type=Microsoft.DataTransfer.ClientLibrary.Odbc.Exceptions.OdbcException,Message=ERROR [HY000] [Microsoft][ODBC Oracle Wire Protocol driver][Oracle]ORA-26086: direct path does not support triggers

Error in parameter 1.,Source=msora28.dll,'

What’s going on here pal? I’m just trying to copy like a couple hundred rows, ain’t much but it’s honest work, why are you stopping me?

Turns out, ADF is rightfully trying to insert the rows in bulk, but Oracle doesn’t like it when the sink table has triggers, falling back to row by row insertion seems it’s too much to ask, so you end up with the error. Searching on the good ‘ol wide web you’ll encounter this solution , that basically tells you to disable bulkload for the whole Oracle connection by setting EnableBulkLoad=0 in the connection string. That wouldn’t work for me, because I’m bulk loading just fine everywhere else, so either I had to suffer slower performance on all the other inserts by disabling the bulk insert, or I had to create an additional and separate Linked Service to Oracle with the bulk insert disabled: doable but adding maintenance overhead when things change (two secrets to manage instead of one).

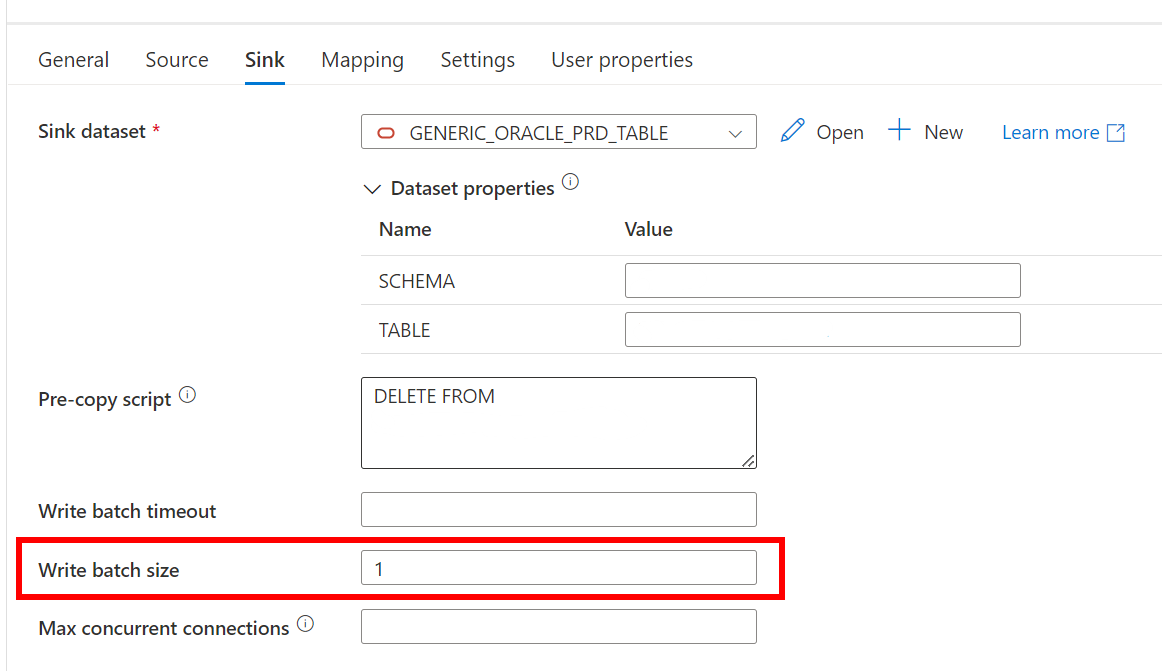

My solution? Super dumb. Is it complaining that it can’t do bulk inserts? Fine, I’ll send the rows one by one:

By setting Write Batch Size to one, it’s not a batch insert anymore, and you get the same behaviour of disabling the batch insert in the connection string, without having to do so; in this way you can still bulk insert in some tables, and disable the bulk insert in tables with triggers, without having to use a different connection.

You’re welcome.